Lambda Deployment with Minimal YAML Configurations

Abstract

AWS Lambda has gained significant traction among developers as an alternative to traditional containers and physical or virtual servers. This serverless model frees developers from the burden of infrastructure maintenance, allowing them to focus solely on writing code.

Meanwhile, Kubernetes has become the leading standard for organizations managing containerized applications. By using Kubernetes as the Control Plane of an Internal Developer Platform, we can streamline the deployment of AWS Lambda functions. Developers deploy with familiar tools instead of learning new languages and tools such as Terraform (HCL) or CloudFormation. Most developers are already proficient in YAML, which keeps the learning curve low.

Introduction to Platform Engineering and Developer Services

In recent years, platform engineering roles have emerged within companies as distinct from traditional engineering roles. Platform engineers are crucial in supporting internal teams, and their success relies heavily on standardization and automation, which is what allows a small Platform Engineering team to serve the entire organization effectively.

A Developer Service provides tools and frameworks that simplify and automate development tasks, allowing developers to focus on writing code rather than managing infrastructure.

This blog explores how to provide a Developer Service for building Lambdas with a GitHub Actions workflow and deploying them with ArgoCD and Crossplane.

Before proceeding, let’s clarify some of the services/tools we’ll be discussing:

Prerequisites:

- Kubernetes Cluster

- ArgoCD and Crossplane installed in your cluster.

- GitHub and AWS accounts.

Considerations (Tool versions used in this blog):

- Kubernetes Cluster (EKS): v1.29

- ArgoCD: v2.9.6

- Helm: v3.13.2

- Crossplane: v1.14.3

- GitHub: Web

Architecture

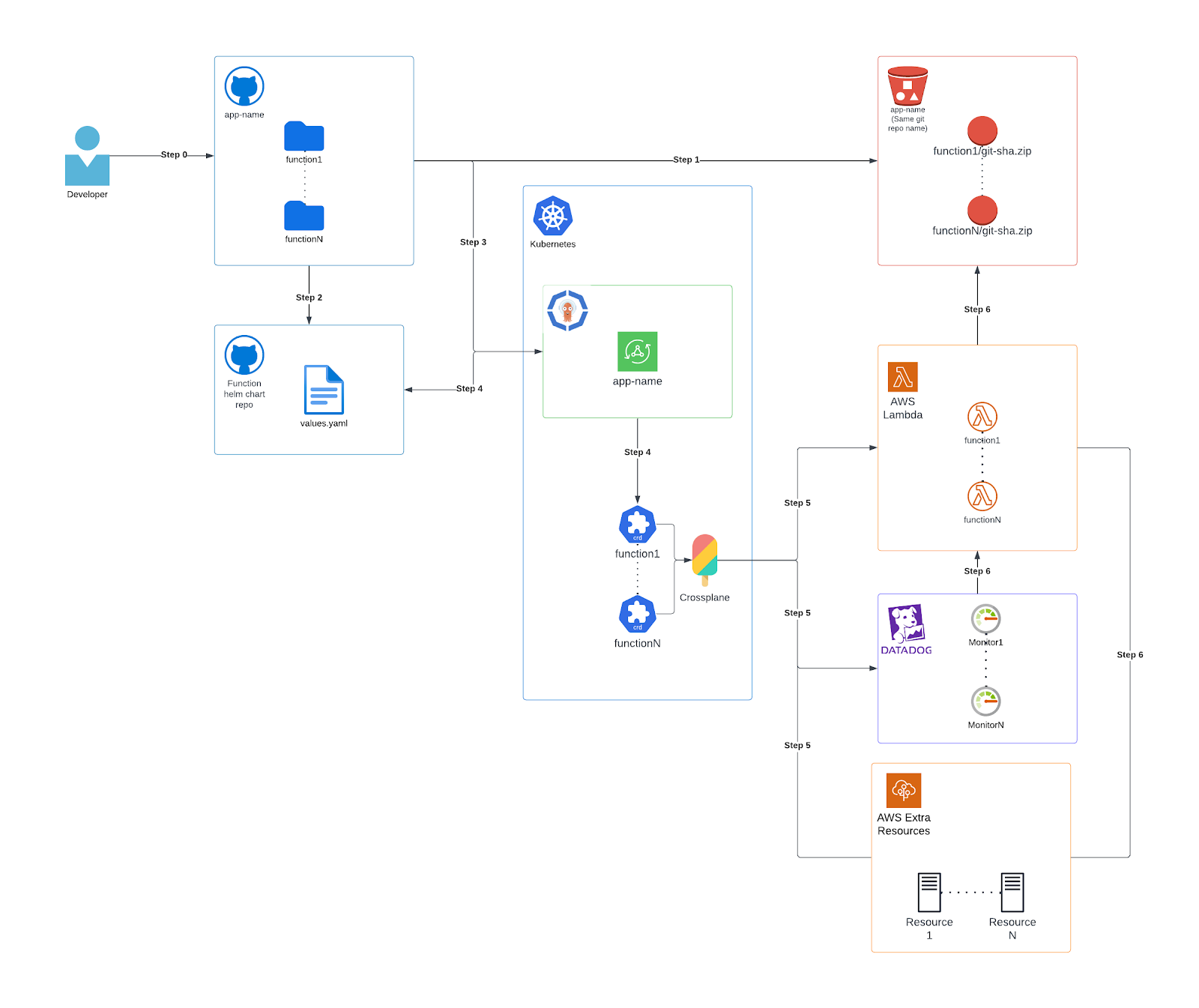

Before diving into implementation details, let’s take a moment to explore the architecture shown in the diagram above (image 1).

Step 0:

This marks the beginning of the CI/CD process: the developer’s code commit triggers the GitHub workflow, which performs the tasks explained in the following steps.

Step 1:

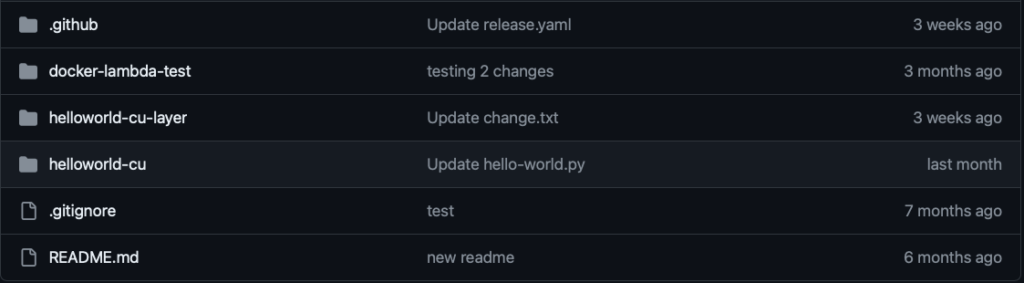

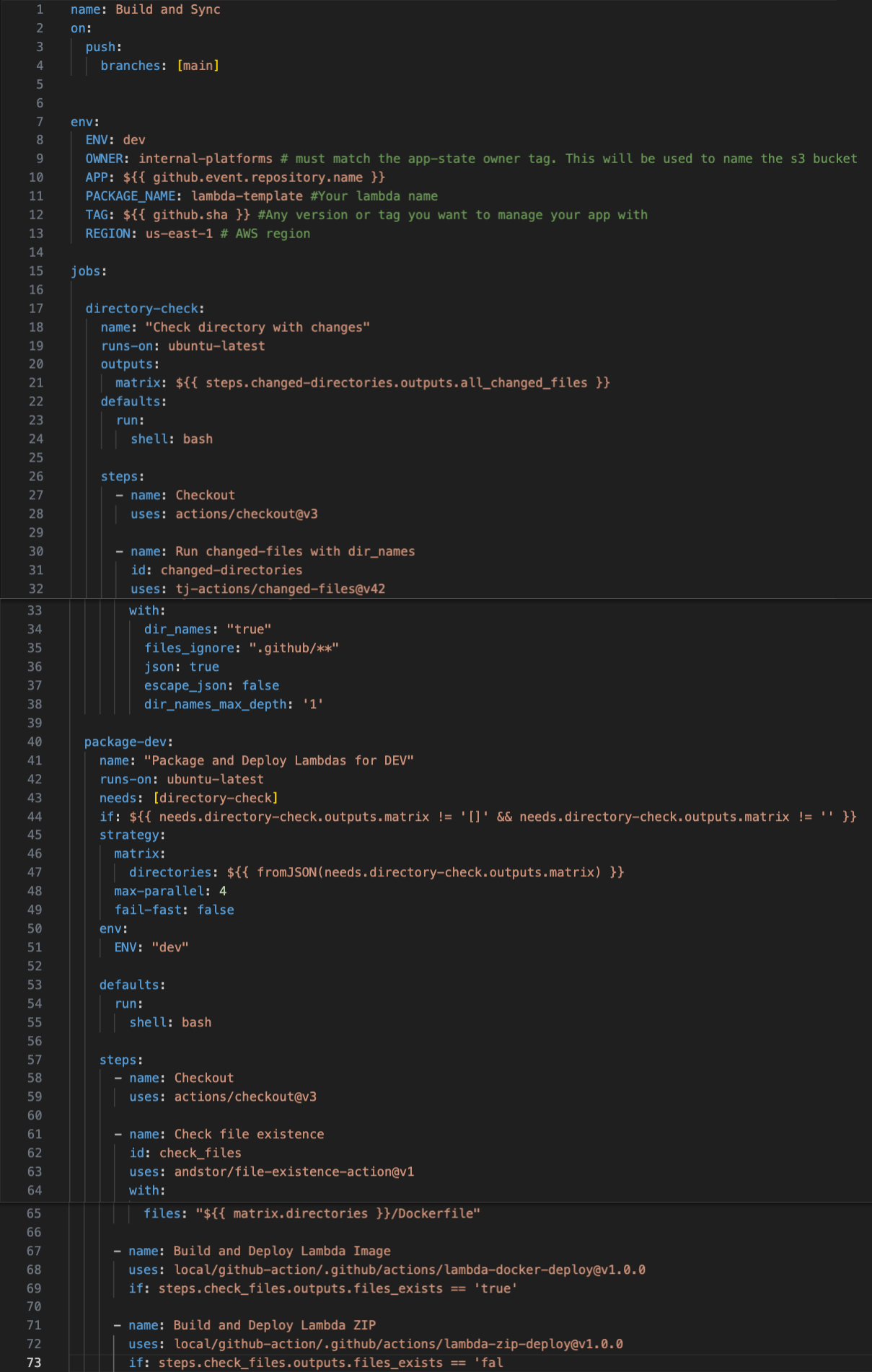

Once GitHub receives the code commit, the workflow starts. It identifies the changed directory and determines whether it contains a Lambda of type ZIP or Image (an Image Lambda is detected by the presence of a Dockerfile, as shown on line 17 of the Workflow below). For ZIP-type Lambdas, it packages the code into a ZIP file and uploads it to an S3 bucket. For Image-type Lambdas, it builds the Docker image and pushes it to Amazon ECR, the registry Lambda pulls container images from.

Refer to the Workflow code below for these steps.

The workflow uses two local actions (lines 67 and 71): one builds and pushes the container image to ECR, and the other packages and uploads the ZIP file to an S3 bucket. Each step runs only if its conditional evaluates to true. For instance, if a Dockerfile is present in the directory, the workflow runs only the “Build and Deploy Lambda Image” step; otherwise, it runs only the “Build and Deploy Lambda ZIP” step.
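The routing decision described above can be sketched in Python. This is a minimal, hypothetical sketch rather than the workflow itself: the lambdas/<name>/ repository layout, the function names, and receiving the changed paths as a list (for example, from git diff --name-only) are all assumptions. It only models the decisions the workflow makes: which Lambda directories changed, whether each holds an Image or ZIP Lambda, and which of the two mutually exclusive steps runs.

```python
from __future__ import annotations

from pathlib import PurePosixPath

# Hypothetical repository layout: each Lambda lives in lambdas/<name>/.
LAMBDA_ROOT = "lambdas"


def changed_lambda_dirs(changed_files: list[str], root: str = LAMBDA_ROOT) -> set[str]:
    """Return the Lambda directories (root/<name>) touched by a commit."""
    dirs: set[str] = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        # Only files nested inside root/<name>/ count as Lambda changes.
        if len(parts) >= 3 and parts[0] == root:
            dirs.add(f"{root}/{parts[1]}")
    return dirs


def lambda_package_type(file_names: list[str]) -> str:
    """Apply the workflow's rule: a top-level Dockerfile means an image Lambda, else ZIP."""
    return "image" if "Dockerfile" in file_names else "zip"


def selected_step(package_type: str) -> str:
    """Name of the single workflow step whose `if` condition evaluates to true."""
    steps = {
        "image": "Build and Deploy Lambda Image",
        "zip": "Build and Deploy Lambda ZIP",
    }
    return steps[package_type]


if __name__ == "__main__":
    changed = ["lambdas/orders/app.py", "lambdas/users/Dockerfile", "README.md"]
    # Stand-in for listing each directory's files (e.g. os.listdir on a real runner).
    listings = {
        "lambdas/orders": ["app.py", "requirements.txt"],
        "lambdas/users": ["Dockerfile", "app.py"],
    }
    for d in sorted(changed_lambda_dirs(changed)):
        print(d, "->", selected_step(lambda_package_type(listings[d])))
```

Because the two step names map one-to-one onto the package type, exactly one build path runs per directory, which is the property the workflow's conditionals enforce.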

Step 2:

The Helm chart values for the Lambda are updated to reflect the new build version. This happens inside one of the local actions, which downloads the Helm chart and modifies the version in the values.{env}.yaml file.
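A minimal sketch of the version bump, assuming the chart keeps the build version under the first version: key of values.{env}.yaml. The actual action may use a YAML-aware tool; this stdlib-only Python version rewrites the matching line with a regular expression so that comments and formatting in the values file survive. The function names and the values layout are hypothetical.

```python
from __future__ import annotations

import re
from pathlib import Path

# Matches the first "version:" key, preserving indentation and any trailing comment.
# Assumes (hypothetically) that this key holds the build version in values.{env}.yaml.
_VERSION_LINE = re.compile(
    r"""^(?P<prefix>[ \t]*version:[ \t]*)(?P<value>"[^"]*"|'[^']*'|[^\s#]+)""",
    re.MULTILINE,
)


def bump_version(values_text: str, new_version: str) -> str:
    """Return values_text with the first version: value replaced by new_version."""
    new_text, count = _VERSION_LINE.subn(
        lambda m: f'{m.group("prefix")}"{new_version}"', values_text, count=1
    )
    if count == 0:
        raise ValueError("no 'version:' key found in the values file")
    return new_text


def update_values_file(chart_dir: str, env: str, new_version: str) -> Path:
    """Rewrite <chart_dir>/values.<env>.yaml in place and return its path."""
    path = Path(chart_dir) / f"values.{env}.yaml"
    path.write_text(bump_version(path.read_text(), new_version))
    return path
```

Rewriting a single line, instead of loading and re-dumping the whole YAML document, keeps the resulting Git commit to a one-line diff that is easy to review.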

Step 3:

This step syncs the ArgoCD application so that the changes made to the Helm chart are deployed.
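One way to trigger the sync from the workflow is to call the argocd CLI. The Python sketch below assumes the runner already has the CLI installed and is logged in to the ArgoCD server (argocd login); the application name is hypothetical, and the flags used with argocd app wait (--sync, --health, --timeout) should be checked against the CLI version in use. The dry_run switch only returns the commands, which keeps the sketch testable without a cluster.

```python
from __future__ import annotations

import shutil
import subprocess


def sync_commands(app_name: str, timeout_s: int = 300) -> list[list[str]]:
    """argocd CLI invocations that sync an application and wait until it is healthy."""
    return [
        ["argocd", "app", "sync", app_name],
        ["argocd", "app", "wait", app_name, "--sync", "--health", "--timeout", str(timeout_s)],
    ]


def sync_app(app_name: str, timeout_s: int = 300, dry_run: bool = False) -> list[list[str]]:
    """Run the sync commands; with dry_run=True, only return them."""
    commands = sync_commands(app_name, timeout_s)
    if dry_run:
        return commands
    if shutil.which("argocd") is None:
        raise RuntimeError("argocd CLI not found on PATH")
    for cmd in commands:
        # check=True raises CalledProcessError if the sync or the wait fails.
        subprocess.run(cmd, check=True)
    return commands
```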

Step 4:

Upon synchronization, the ArgoCD application retrieves the Helm chart from the GitHub repository.

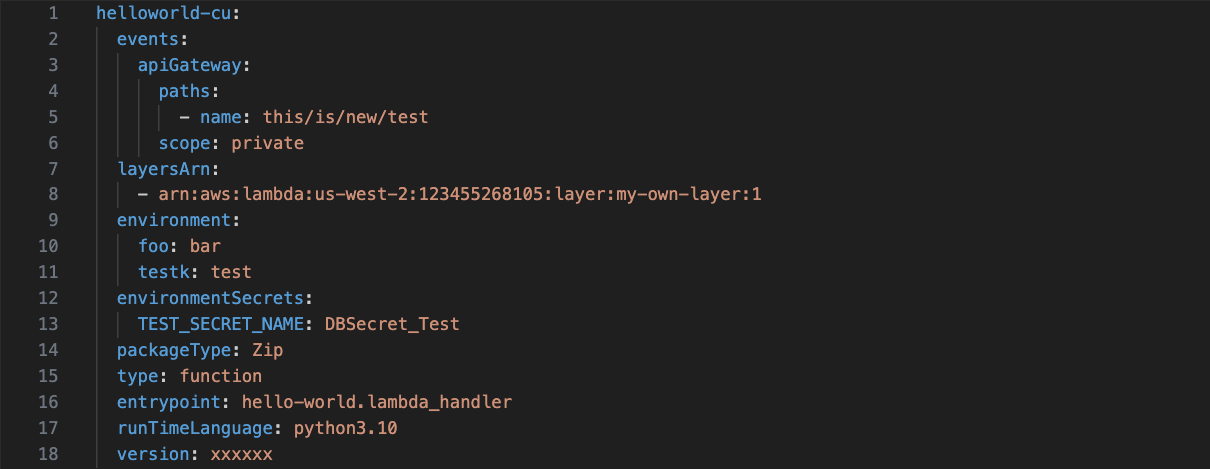

ArgoCD then proceeds to deploy the requisite resources based on the configurations specified in the values.{env}.yaml file.

For example:

This configuration deploys a ZIP-type Lambda function, meaning its code resides in an S3 bucket rather than as an image in ECR. The function runs Python 3.10, is configured with custom environment variables, and is exposed through API Gateway. Integrating the Lambda with API Gateway requires deploying several additional resources and configurations.
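The way a values file drives what gets deployed can be sketched in Python. In this hypothetical model, a dict stands in for a values.{env}.yaml matching the example described above (ZIP package, Python 3.10, custom environment variables, API Gateway exposure), and planned_resources lists what a chart template would render from it. All key names are assumptions, not the chart's real schema.

```python
from __future__ import annotations

from typing import Any

# Hypothetical values.dev.yaml for the example above, written as a Python dict.
# Key names are illustrative, not the real chart's schema.
EXAMPLE_VALUES: dict[str, Any] = {
    "lambda": {
        "name": "orders",
        "packageType": "zip",  # code lives in S3, not in ECR
        "runtime": "python3.10",
        "version": "1.4.2",
        "environment": {"LOG_LEVEL": "info", "TABLE_NAME": "orders-dev"},
        "apiGateway": {"enabled": True, "pathPart": "orders", "httpMethod": "GET"},
    }
}


def planned_resources(values: dict[str, Any]) -> list[str]:
    """List the resources a chart template would render for these values."""
    lam = values["lambda"]
    if lam["packageType"] not in ("zip", "image"):
        raise ValueError(f"unsupported packageType: {lam['packageType']!r}")
    # Every Lambda gets its IAM role and policy plus the function itself.
    planned = ["IAM Role", "IAM Policy", f"Lambda Function ({lam['packageType']})"]
    # API Gateway wiring is rendered only when the developer opts in.
    if lam.get("apiGateway", {}).get("enabled", False):
        planned += ["API Gateway Resource", "API Gateway Method", "API Gateway Integration"]
    # Datadog monitors are always on, regardless of the values.
    planned.append("Datadog Monitors")
    return planned
```

Developers only edit the dict-like values; which resources exist, and how they are wired together, stays in the platform team's templates.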

To integrate the Lambda with API Gateway, you must create the following resources using the API Gateway provider:

- Integration

- Resource

- Method

Note: There is a known bug affecting the parentIdSelector when working with the Resource kind for the API Gateway provider. Further details about this issue can be found here.
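As a rough illustration of how the three resources relate, the Python sketch below builds them as plain dicts shaped like Crossplane managed-resource manifests. The apiVersion and field names are modeled on the Upbound AWS API Gateway provider, which mirrors the Terraform aws_api_gateway_* arguments; treat them as assumptions to verify against the CRDs of the provider version you install. The app label convention and all argument values are hypothetical. Given the parentIdSelector bug noted above, the sketch passes parentId as a literal value; whether that sidesteps the issue in a given provider version is also an assumption.

```python
from __future__ import annotations

from typing import Any

# Assumed API group/version of the API Gateway provider's managed resources.
API_VERSION = "apigateway.aws.upbound.io/v1beta1"


def _managed(kind: str, name: str, app: str, for_provider: dict[str, Any]) -> dict[str, Any]:
    """Wrap spec.forProvider in a minimal managed-resource manifest."""
    return {
        "apiVersion": API_VERSION,
        "kind": kind,
        "metadata": {"name": name, "labels": {"app": app}},
        "spec": {"forProvider": for_provider},
    }


def api_gateway_manifests(
    app: str,
    region: str,
    rest_api_id: str,
    parent_id: str,
    function_arn: str,
    path_part: str,
    http_method: str = "GET",
) -> list[dict[str, Any]]:
    """Build the Resource, Method, and Integration that expose a Lambda."""
    # Method and Integration find the Resource through this label selector.
    resource_selector = {"matchLabels": {"app": app}}
    # Invocation URI format used by Lambda proxy integrations.
    uri = (
        f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/"
        f"functions/{function_arn}/invocations"
    )
    resource = _managed("Resource", f"{app}-resource", app, {
        "region": region,
        "restApiId": rest_api_id,
        "parentId": parent_id,  # literal ID instead of parentIdSelector
        "pathPart": path_part,
    })
    method = _managed("Method", f"{app}-method", app, {
        "region": region,
        "restApiId": rest_api_id,
        "resourceIdSelector": resource_selector,
        "httpMethod": http_method,
        "authorization": "NONE",  # no authorizer, for illustration only
    })
    integration = _managed("Integration", f"{app}-integration", app, {
        "region": region,
        "restApiId": rest_api_id,
        "resourceIdSelector": resource_selector,
        "httpMethod": http_method,  # must match the Method
        "integrationHttpMethod": "POST",  # API Gateway invokes Lambda with POST
        "type": "AWS_PROXY",
        "uri": uri,
    })
    return [resource, method, integration]
```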

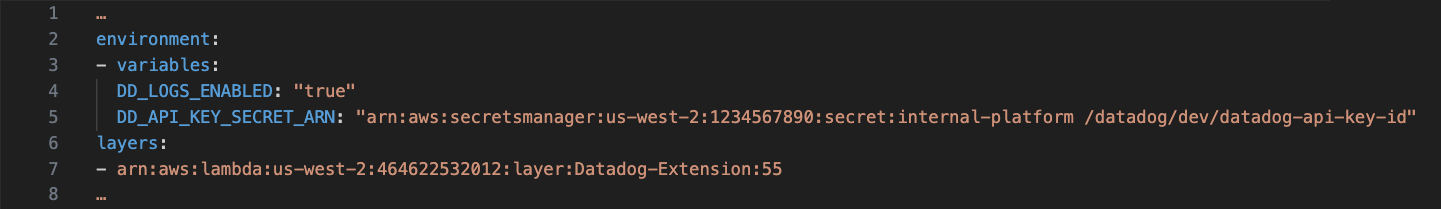

As highlighted earlier, our objective is to build best practices into developers’ workflows. One example is our requirement that every Lambda integrates with Datadog: by default, all our Lambdas come with Datadog preconfigured and a set of default Datadog monitors.

Refer to the code below for details on how we integrate the Lambda with Datadog:

These Datadog settings in the Lambda resource are hardcoded: they are not exposed in values.{env}.yaml but live in the Lambda template file within the Helm chart.
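As a hedged illustration of the kind of settings a Lambda template can hardcode, the Python sketch below applies the changes Datadog documents for instrumenting a Python function with its Lambda layer and extension: the handler is redirected to datadog_lambda.handler.handler, the original handler is kept in DD_LAMBDA_HANDLER, the DD_* variables are set, and the layers are attached. The layer versions, secret ARN, and function names are placeholders, and the layer account and naming (x86_64 variants assumed) should be checked against Datadog's current documentation.

```python
from __future__ import annotations

import copy
from typing import Any

# AWS account that publishes Datadog's public Lambda layers in commercial regions.
DATADOG_LAYER_ACCOUNT = "464622532012"


def with_datadog(
    fn: dict[str, Any],
    *,
    region: str,
    service: str,
    env: str,
    version: str,
    site: str,
    api_key_secret_arn: str,
    python_layer_version: int,
    extension_layer_version: int,
) -> dict[str, Any]:
    """Return a copy of a Python Lambda spec instrumented with Datadog."""
    runtime = fn["runtime"]
    if not runtime.startswith("python"):
        raise ValueError(f"this sketch only handles Python runtimes, got {runtime!r}")
    out = copy.deepcopy(fn)

    # The Datadog wrapper becomes the entry point and calls the original handler.
    out["environment"] = {
        **out.get("environment", {}),
        "DD_LAMBDA_HANDLER": fn["handler"],
        "DD_SITE": site,
        "DD_API_KEY_SECRET_ARN": api_key_secret_arn,
        "DD_SERVICE": service,
        "DD_ENV": env,
        "DD_VERSION": version,
    }
    out["handler"] = "datadog_lambda.handler.handler"

    # python3.10 -> Python310, matching Datadog's layer naming (x86_64 layers assumed).
    layer_runtime = runtime.replace("python", "Python").replace(".", "")
    prefix = f"arn:aws:lambda:{region}:{DATADOG_LAYER_ACCOUNT}:layer"
    out["layers"] = [
        *out.get("layers", []),
        f"{prefix}:Datadog-{layer_runtime}:{python_layer_version}",
        f"{prefix}:Datadog-Extension:{extension_layer_version}",
    ]
    return out
```

Keeping this transformation in the template, rather than in the values file, is what lets every Lambda get the same instrumentation without developers having to know about it.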

For further details on Lambdas, API Gateway, and Datadog resources, please consult the documentation corresponding to the providers and versions specified above (refer to the Considerations section).

Step 5:

Crossplane reconciles the Kubernetes resources deployed by ArgoCD (managed resources defined by the Crossplane providers’ Custom Resource Definitions) and creates the corresponding resources in AWS and Datadog, including the Lambda, IAM Role, IAM Policy, API Gateway configuration, Datadog Monitors, and more (these make up the AWS extra resources section in image 1).

Step 6:

The Lambda is deployed and configured to run the code stored in the S3 bucket at a specific version. All Datadog Monitors are deployed and actively monitor the Lambda. When the configuration requests them (for example, API Gateway exposure), the extra resources that support the Lambda function are deployed as well.

Note: If you have a Lambda of type Image rather than ZIP, the steps remain largely the same. The only differences are that a Docker image is built and pushed to a Docker registry (ECR), and the Lambda is configured to use this image instead of a ZIP file in an S3 bucket.
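The difference between the two package types comes down to the code source the Lambda Function resource points at. The Python sketch below assumes field names that mirror the Lambda API (PackageType Zip or Image, S3Bucket, S3Key, S3ObjectVersion, ImageUri) in the camelCase style of the Upbound Lambda provider; verify them against the provider's Function CRD. The function name and all example values are hypothetical.

```python
from __future__ import annotations

from typing import Any


def code_source(
    package_type: str,
    *,
    bucket: str | None = None,
    key: str | None = None,
    object_version: str | None = None,
    image_uri: str | None = None,
) -> dict[str, Any]:
    """Return the code-location fields of a Lambda Function's forProvider block."""
    if package_type == "zip":
        if not (bucket and key):
            raise ValueError("ZIP Lambdas need an S3 bucket and key")
        source: dict[str, Any] = {"packageType": "Zip", "s3Bucket": bucket, "s3Key": key}
        if object_version:
            # Pin a specific S3 object version when the bucket is versioned.
            source["s3ObjectVersion"] = object_version
        return source
    if package_type == "image":
        if not image_uri:
            raise ValueError("image Lambdas need an ECR image URI")
        # Handler and runtime come from the image itself, so they are omitted here.
        return {"packageType": "Image", "imageUri": image_uri}
    raise ValueError(f"unknown package type: {package_type!r}")
```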

Conclusion

As emphasized in this blog, Platform Engineers are responsible for delivering developer-friendly, functional, secure, and best-practice-aligned services.

How do we achieve this?

- Easy to use: We leverage familiar tools like Helm and Kubernetes to provide a service that developers already understand. Essentially, we supply them with a customized YAML configuration file to meet their needs.

- Functional and Best-Practice Implementation: Our service builds in common functionality so that developers need no manual intervention or workarounds. For instance, Datadog monitoring is enabled automatically, and Lambdas can be exposed through API Gateway directly from their configuration.

- Secure: Using ArgoCD and Helm, we require developers to make all modifications exclusively in the values.{env}.yaml file, which prevents manual alterations. Developers cannot access the template files; they manage only the values.{env}.yaml. The template files are the core of a Helm chart, since they contain all the logic and relationships between the resources created. We expose only the configuration files to avoid misconfigurations or security issues that could arise because developers may lack deep knowledge of this domain (Kubernetes and third-party resources). Developers do not need to be experts in this, and we do not expect them to be; we only expect them to understand and configure their application’s needs in a YAML file. As platform engineers, we are responsible for the how, using the configuration values they provide. If a resource managed by Crossplane is changed manually, Crossplane automatically reverts it to the state declared in its managed resource, which ArgoCD syncs from Git. This ensures that all modifications are carried out through Git, which provides a robust audit trail of the who, when, and where of each change (GitOps).

Why not Terraform?

You might wonder: why not opt for Terraform, a well-established and mature tool? While Terraform can achieve similar outcomes, adopting it would sacrifice two key benefits: automation and governance. As outlined earlier, manual changes are effectively prevented because Crossplane continuously reconciles resources and reverts any drift. Terraform does not provide this self-healing out of the box: drift is only detected and corrected when someone runs a plan or apply.
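The self-healing behavior can be illustrated with a toy reconcile step in Python. This is a conceptual sketch, not Crossplane code: a real provider compares a managed resource’s spec.forProvider with the external resource’s observed state on every poll and calls the cloud API to correct differences. The field names are ordinary Lambda settings chosen for illustration; only fields that are declared in the desired state get corrected.

```python
from __future__ import annotations

from typing import Any


def drift(desired: dict[str, Any], observed: dict[str, Any]) -> dict[str, Any]:
    """Fields declared in desired whose observed value differs."""
    return {k: v for k, v in desired.items() if observed.get(k) != v}


def reconcile(
    desired: dict[str, Any], observed: dict[str, Any]
) -> tuple[dict[str, Any], dict[str, Any]]:
    """One reconcile pass: push declared fields back, leave undeclared fields alone."""
    patch = drift(desired, observed)
    # In a real provider this would be an update call to the cloud API.
    corrected = {**observed, **patch}
    return corrected, patch


if __name__ == "__main__":
    desired = {"memorySize": 256, "timeout": 30}  # declared in Git, synced by ArgoCD
    observed = {"memorySize": 1024, "timeout": 30, "lastModified": "t1"}  # edited by hand
    state, patch = reconcile(desired, observed)
    print(patch)  # {'memorySize': 256}
```

Running reconcile again on the corrected state yields an empty patch, which is the steady state a controller converges to after reverting a manual change.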

Additionally, building a platform with Terraform requires developers to learn a new language, HCL. In contrast, Crossplane uses YAML, a format developers already know from Kubernetes workloads and the many configuration files they have encountered throughout their careers; YAML is both intuitive and widely used.


Carlos Nasser

Carlos Nasser is currently contracted as a Senior Platform Engineer at Collectors through Caylent (https://caylent.com/), a leading consultancy firm renowned for its expertise in cloud solutions and digital transformation.

Carlos Nasser is pivotal in assisting Collectors in building an Internal Developer Platform. With seven years of experience in the tech industry, he has sharpened his skills in Kubernetes, CI/CD pipelines, and AWS, demonstrating a deep commitment to leveraging cutting-edge technologies to drive business efficiency and innovation. His technical skills and strategic approach have been instrumental in delivering robust cloud solutions and optimizing infrastructure for high performance and scalability.

Beyond his professional achievements, Carlos enjoys a well-rounded life. He likes playing squash and video games, spending quality time with family and friends, watching sports, and learning new things.